Reliability_and_validity by Careershodh


Meaning of validity

Validity refers to the question: “Does the test measure what it claims to measure?”

The word “valid” comes from the Latin ‘validus’, meaning strong. Validity refers to the accuracy of a test or experiment.

- The concept of validity was put forward by Kelly (1927), who defined it as follows: “a test is valid if it measures what it claims to measure”.

- In logic, validity is the property of an argument in which ‘the truth of the premises guarantees the truth of the conclusion’.

- Test Validity refers to the meaning and usefulness of test results.

- The validity of an assessment is the degree to which it measures what it is supposed to measure.

- More specifically, validity refers to the degree to which a certain inference or interpretation based on a test is appropriate.

- The validity of a test concerns what the test measures and how well it does so.

- Example: conscientiousness.

- What is the actual concept?

- Which aspects of the concept does the test capture?

Definition of Validity

“Validity is the degree to which evidence and theory support the interpretation of test scores entailed by the uses of the test.”

“An index of validity shows the degree to which a test measures what it purports to measure when compared with accepted criteria.” –Freeman

History of Validity or evolving concepts of validity

1st stage of development of Validity –

- The earliest uses of tests were to assess what individuals had learned, usually at the end of a course.

- Example: end-of-semester exams.

- For an achievement test, validity was judged by comparing its content with the content domain it was designed to assess.

- This approach is still relevant today.

2nd stage of development of validity

- The focus shifted to prediction.

- How will people respond in a given situation, now and in the future?

- Here, test validity means the correlation coefficient between test scores and a direct and independent measure of the criterion.

- This is useful for selection and placement in education, jobs, treatment, etc.

3rd (current) stage of development of validity

- Two major trends:

- A strengthened theoretical orientation.

- A close link between psychological theory and verification through empirical and experimental hypothesis testing.

- These trends recognized the value of constructs.

Construct –

- Broad category

- Derived from the common features shared by directly observable behaviors.

- Theoretical entities, not directly observed.

This led to the introduction of construct validity as the fundamental, all-inclusive form of validity.

Validity Coefficient

- The relationship between a test and a criterion is usually expressed as a correlation, called the validity coefficient.

- This coefficient tells the extent to which the test is valid for making statements about the criterion.

- Validity coefficients in the range of .30 to .40 are commonly considered high.

- Whether a validity coefficient is statistically significant is not, by itself, the most important question.

- Issues of concern when interpreting validity coefficients:

- Look for changes in the cause of the relationship.

- The logic of criterion validation presumes that the causes of the relationship between the test and the criterion will still exist when the test is in use.

- What does the criterion mean? Criterion-related validity studies mean nothing at all unless the criterion is valid and reliable.
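Because the validity coefficient is an ordinary Pearson correlation between test scores and criterion scores, a short calculation can make the idea concrete. The Python sketch below assumes invented data for eight people (the scores, the GPA-like criterion, and the function name `pearson_r` are all hypothetical, not from the source). It also squares the coefficient, which gives the proportion of criterion variance the test accounts for.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations (covariance numerator)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations (variance numerators)
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("a variable with no variation has no defined correlation")
    return cross / sqrt(ss_x * ss_y)


# Hypothetical data: test scores and a later criterion (e.g. GPA) for eight
# people. These numbers are invented for illustration only.
test_scores = [52, 61, 47, 70, 58, 66, 43, 55]
criterion = [2.9, 3.1, 3.0, 3.4, 2.7, 3.3, 2.8, 3.2]

r = pearson_r(test_scores, criterion)
# r squared: proportion of criterion variance accounted for by the test
r_squared = r ** 2

print(f"validity coefficient r = {r:.2f}")
print(f"proportion of criterion variance explained = {r_squared:.2f}")
```

The invented data produce a stronger relationship than real tests usually show. With a coefficient of .40, at the top of the range described above, the test would account for only 16% of criterion variance (.40² = .16).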

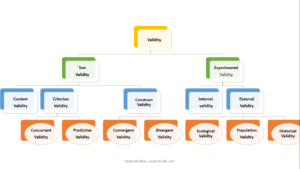


Test Validity

Test validity is an indicator of how much meaning can be placed upon a set of test results.

Types of Test Validity

There are three types of evidence:

(1) Construct validity: construct-related evidence

(2) Criterion validity: criterion-related evidence

(3) Content validity: content-related evidence

Face validity (not a pure Validity type)

Face validity is the simplest form of validity.

Face validity is the mere appearance that a measure has validity.

Items, statements, or questions should be reasonably related to the perceived purpose of the test.

Face validity is relevant for ability tests and achievement tests.

For example, any school or college test should have face validity.

For instance, consider a scale to measure anxiety.

Items with face validity for an anxiety test might be:

- “My stomach gets upset when I think about taking tests”

- “My heart starts pounding fast whenever I think about results.”

- If a person says ‘yes’ to both questions, can we conclude that the person is anxious?

- No! – Validity requires evidence in order to justify conclusions.

- Face validity is not validity at all, because it does not offer evidence to support conclusions drawn from test scores.

- Face validity means only that a test appears to measure a certain criterion; it does not guarantee that the test actually measures that phenomenon.

- Even so, face validity is important: a test should “look like” it is valid.

- These appearances can help to motivate test takers by showing relevance.

1. Content Validity or Content-Description Validation Procedures

- It estimates how well a measure represents every element of a construct or concept.

- It is a systematic examination of test content to determine whether it covers a representative sample of all dimensions or domains to be measured.

- This validation procedure is used in designing tests that measure how well a person has mastered a skill.

- Domains, sections, types, etc. should be fully described in advance.

- Content must be defined broadly to include major objectives- application of principles, the interpretation of data, factual knowledge, etc.

- Validity depends on the relevance of a person's test responses to the behavior area under consideration, rather than on the apparent relevance of item content.

Specific procedure to develop Content Validity

- Choice of appropriate items/ statements/ questions

- Systematic examination of course syllabi and textbooks

- Consultation of subject matter experts (SMEs)

- Test specifications: content areas, instructional objectives or processes, the importance of individual topics, and the number of items

- Content validation should be discussed in the test manual.

- Empirical procedures to establish content validity should cover both total scores and item scores.

- Supplementary procedures to establish content validity should include an analysis of the types of errors.

- Content validity establishment should consider the adequacy of representation of the conceptual domain the test is designed to cover.

- Traditionally, content validity evidence has been of greatest concern in educational testing.

- A distinctive feature of content validity, like face validity, is that it is logical rather than statistical.

- Establishing content validity requires good logic, intuitive skills, and perseverance.

Two newer concepts in the development of content validity:

1. Construct underrepresentation

Construct underrepresentation describes the failure to capture important components of a construct.

For example, if a test of mathematical knowledge included algebra but not geometry, the validity of the test would be threatened by construct underrepresentation.

2. Construct-irrelevant variance

- Construct-irrelevant variance occurs when scores are influenced by factors irrelevant to the construct.

- For example, a test of intelligence might be influenced by reading comprehension, test anxiety, or illness.

Application of Content Validity –

- Content validity is appropriate for educational tests, achievement tests, and employee selection, evaluation, and classification.

- Content validity is inappropriate for aptitude and personality tests.

Limitations of Content Validity –

Content validity does not rule out construct-irrelevant variance. For example, many students do poorly on tests because of anxiety or reading problems rather than a lack of the knowledge being tested.

2. Criterion Validity or Criterion-Related Evidence for Validity

- Criterion validity evidence tells us just how well a test corresponds with a particular criterion.

- A criterion is the standard against which the test is compared.

- For example, a test might be used to predict which engaged couples will have successful marriages and which ones will get divorced.

Here the criterion is marital success.

Types of Criterion Validity

1. Predictive validity evidence

The forecasting function of tests is actually a type or form of criterion validity evidence known as predictive validity evidence.

For example, the SAT, including its quantitative and verbal subtests, is the predictor variable, and college grade point average (GPA) is the criterion; the GRE plays the same role for graduate school.

Aptitude tests given by employers and companies are another example of predictive validity.

The purpose of the test is to predict the likelihood of succeeding on the criterion—i.e., achieving a high GPA in college.

2. Concurrent validity evidence

Concurrent validity evidence is obtained when the criterion measures are collected at the same time as the test scores.

When the measure is compared with another measure of the same type, the two should be related.

It shows the extent to which the test scores accurately estimate a person’s current state with respect to the criterion.

Concurrent validity evidence comes from assessing the simultaneous relationship between the test and the criterion, such as between a learning disability test and school performance.

Concurrent evidence for validity applies when the test and the criterion can be measured at the same time.

For example, a depression test has concurrent validity if it measures the current level of depression experienced by the person taking the test.

Application of Concurrent validity

Most psychological tests use this type of validity evidence.

Concurrent validity is mostly used in the industrial sector.

3. Construct Validity

Construct validity refers to how well a test or experiment measures up to its claims.

A test created to measure depression must measure only that specific construct, not closely related ideas such as stress or anxiety.

A test has construct validity if it demonstrates an association between its scores and the predictions of a theoretical trait, concept, attribute, etc.

By the mid-1950s, researchers / investigators concluded that no clear criteria existed for most of the social and psychological characteristics they wanted to measure.

For example, measures of intelligence, love, curiosity, or mental health.

All of these tests should have construct validity.

There was no criterion for intelligence because it is a hypothetical construct.

A construct is defined as something built by mental synthesis.

As a construct, intelligence does not exist as a separate thing we can touch or feel, so it cannot be used as an objective criterion.

Construct validity can be established through a series of activities in which a researcher simultaneously defines a construct and develops the instrumentation to measure it.

- Campbell and Fiske (1959) introduced an important set of logical considerations for establishing evidence of construct validity. They distinguished between two types of evidence essential for a meaningful test: convergent and discriminant (divergent).

Two types of Construct validity by Campbell & Fiske (1959)

1. Convergent validity

Convergent validity tests whether constructs that are expected to be related are, in fact, related.

When a measure correlates well with other tests believed to measure the same construct, convergent evidence for validity is obtained.

In each case, scores on the test are related to scores on some other measure.

However, there is no criterion to define what we are attempting to measure.

Convergent evidence is obtained in one of two ways:

- We show that a test measures the same things as other tests used for the same purpose.

- We demonstrate specific relationships that we can expect if the test is really doing its job.

2. Discriminant or Divergent Validity

Discriminant validity tests whether constructs that should have no relationship do, in fact, have no relationship.

It shows that the measure does not include superfluous items and that the test measures something distinct from other tests.
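Both kinds of evidence come down to checking an expected pattern of correlations. The Python sketch below assumes invented scores for six people on a hypothetical new depression scale, on an established scale of the same construct, and on a hypothetical measure that theory predicts to be unrelated; all names and numbers are illustrative, not from the source. Convergent evidence expects a high correlation with the established scale, and discriminant evidence expects a correlation near zero with the unrelated measure.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("a variable with no variation has no defined correlation")
    return cross / sqrt(ss_x * ss_y)


# Hypothetical scores for six people (invented for illustration only)
new_depression_scale = [10, 14, 8, 20, 16, 12]
# Established measure of the SAME construct: convergent evidence expects high r
established_depression_scale = [11, 15, 9, 19, 17, 12]
# Hypothetical measure of a construct theory says is unrelated:
# discriminant evidence expects r near zero
unrelated_measure = [7, 3, 5, 6, 4, 3]

convergent_r = pearson_r(new_depression_scale, established_depression_scale)
discriminant_r = pearson_r(new_depression_scale, unrelated_measure)

print(f"convergent r (same construct)     = {convergent_r:.2f}")
print(f"discriminant r (unrelated measure) = {discriminant_r:.2f}")
```

A single pair of correlations from six people is weak evidence on its own; the sketch only shows the pattern that convergent and discriminant evidence look for.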

Experimental Validity

The validity of the design of experimental research studies is a central part of the scientific method, and a concern of research ethics. Without a valid design, valid scientific deductions cannot be drawn.

Types of Experimental Validity

1. Internal Validity

Internal validity concerns whether a researcher’s experimental design carefully follows the principle of cause and effect.

It is an inductive evaluation of the degree to which conclusions about causal relationships (cause and effect) can be made, based on the measures used, the research setting, and the whole research design.

In other words, it asks whether there is a causal relationship between the independent and dependent variables.

Internal validity can be improved by controlling extraneous variables, using standardized instructions, counterbalancing, and eliminating demand characteristics and investigator effects.

2. External Validity

External validity is about generalization: to what extent can an effect in research be generalized to populations, settings, treatment variables, and measurement variables?

External validity concerns the extent to which the (internally valid) results of a study can be held to be true for other cases, for example to different people, places or times.

External validity can be improved by setting experiments in a more natural setting and using random sampling to select participants.

External validity is usually divided into the following types:

- Population validity – other people

- Historical validity – over time

- Ecological validity – the extent to which research outcomes can be applied to real-life circumstances outside the research setting

Both internal and external validity are essential elements in judging the strength of an experimental design.

Reference books for validity and types of validity

Anastasi, A., & Urbina, S. (1997). Psychological testing (7th ed.). Prentice Hall/Pearson Education.

https://www.simplypsychology.org/validity.html#ext

Niwlikar, B. A. (2020, October 11). What Is Validity & Its Definition, History, Types? Careershodh. https://www.careershodh.com/validity-meaning-definitions-types-internal-validity-construct-validity/