Introduction to Item Analysis


Importance of Item Analysis :

Items (questions) are representative of the psychological test measuring a particular construct, be it an ability, aptitude, personality, attitude, or any other psychological trait (Anastasi & Urbina, 2009). Hence, the quality of the test depends on the quality of the items in it. During test construction, the test maker produces a large pool of test items and must select a limited number of the best possible items to create a highly reliable and valid test. Item analysis is the process that helps the test maker evaluate the test items and determine which items should be retained, which revised, and which thrown out (Gregory, 2015).

Types of Items

DeVellis (1991) provided several simple guidelines for item writing.

- Define clearly what you want to measure, and be as specific as possible.

- Generate an item pool. Avoid exceptionally long items, which are rarely good.

- Keep the level of reading difficulty appropriate for those who will complete the scale.

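- Avoid “double-barreled” items that convey two or more ideas at the same time. For example, consider an item that asks the respondent to agree or disagree with the statement, “I vote for this party because I support social programs.” The statement bundles two ideas (voting for the party and supporting social programs), so a respondent who agrees with only one of them has no clear way to answer.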

- Consider mixing positively and negatively worded items. Sometimes respondents develop an “acquiescence response set,” a tendency to agree with most items regardless of their content.

Item Formats

- The dichotomous format: two options, such as Yes/No or True/False.

- The polytomous format: three or more options.

- The Likert format: typically a 5-point scale.

- The category format: for example, a 10-point rating scale.

- Checklists and Q-sorts.

Definition of Item Analysis

According to the American Psychological Association (2018), item analysis is a set of procedures used to evaluate the statistical merits of the individual items comprising a psychological measure or test.

Two criteria are used to decide whether an item is good or not: item difficulty and item discrimination.

1. Item Difficulty

- Denoted by ‘p’ (the proportion of test takers who answered the item correctly).

- A classical item analysis statistic for knowledge-based tests, such as:

  - Achievement tests

  - Ability tests

  - IQ tests

Item difficulty is defined by the proportion of people who get a particular item correct.

- For example, if 76% of the students taking a particular test get item no. 24 correct, then the difficulty level for that item is .76.

- If 40% answer the item correctly, the item difficulty level is .40.

- If 15% answer the item correctly, the item difficulty level is .15.

A common objection is that these proportions do not really indicate item “difficulty” but item “easiness”: the higher the proportion of people who get the item correct, the easier the item (Allen & Yen, 1979).

Definition of Item Difficulty :

The item difficulty for a single test item is defined as the proportion of examinees in a large try-out sample who get that item correct.

– Gregory R.J (2015)

Item difficulty index (p) = number of correct responses / total number of responses

Let's understand the definition with a simple example:

Suppose a test measuring mathematical ability is administered to a class of 100 students, and the test maker sees the following result for each test question (item):

| Question (Item) No. | No. of students who solved the question correctly |
|---|---|
| 1 | 100 |
| 2 | 85 |
| 3 | 50 |
| 4 | 20 |

Here is what we observe:

Question 1 is solved correctly by all the students in the classroom, whereas only 20 students are able to solve Question 4. This proportion of correct answers indicates how hard or easy the question was for the given class of students. The more students are able to solve a question, the easier it is. Hence, Question 1 is the easiest question, while Question 4 is the most difficult.

- More individuals solve the item correctly -> easy item

- Fewer individuals solve the item correctly -> difficult item

| Question (Item) No. | No. of students who solved the question correctly (a) | Item Difficulty Index (a/N) | Interpretation |
|---|---|---|---|
| 1 | 100 | 100/100 = 1.00 | Very easy : Discard |
| 2 | 85 | 85/100 = 0.85 | Easy : Modify to increase difficulty |
| 3 | 50 | 50/100 = 0.50 | Moderate : Keep |
| 4 | 20 | 20/100 = 0.20 | Very difficult : Discard |

a = number of students who solved the question correctly = number of correct responses

N = total number of students in the class = 100

Hence, the higher the item difficulty index, the easier the item.
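The calculation above can be sketched in a few lines of Python. This is a minimal illustration, not a standard library routine: the function name `item_difficulty` and the dictionary layout are assumptions made for this example, and the counts are those from the first table (100, 85, 50, and 20 correct answers out of 100 students).

```python
def item_difficulty(correct: int, total: int) -> float:
    """Return p, the proportion of test takers who answered the item correctly."""
    if total <= 0:
        raise ValueError("total must be a positive number of test takers")
    if not 0 <= correct <= total:
        raise ValueError("correct must lie between 0 and total")
    return correct / total


# Number of students (out of N = 100) who solved each question correctly.
N = 100
correct_counts = {1: 100, 2: 85, 3: 50, 4: 20}

# p for every item: a higher value means an easier item.
difficulty = {item: item_difficulty(a, N) for item, a in correct_counts.items()}

for item, p in difficulty.items():
    print(f"Item {item}: p = {p:.2f}")
```

Sorting the items by `p` would order them from most difficult to easiest, which is often the first thing a test maker wants to see.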

Item Difficulty Index

The item difficulty index is a measure used in test analysis to determine how challenging each question (or item) is for a group of test-takers. It is calculated as the proportion of students who answered the item correctly, typically ranging from 0 to 1. A higher index (closer to 1) indicates an easier item that many students answered correctly, while a lower index (closer to 0) signifies a more difficult item.


An ideal test includes a mix of items across a range of difficulty levels, allowing for a more accurate assessment of different levels of understanding within the group. This index helps educators and test developers ensure that tests are balanced and measure learning effectively.

Formula: Item difficulty index (p) = number of correct responses / total number of responses

Role of item difficulty in analyzing the test item?

The optimal difficulty of an item lies between 0.3 and 0.7. Keeping items in this range helps avoid ceiling and floor effects in the test scores.

Floor Effect : Large number of test-takers achieve the lowest possible score – occurs if items are too difficult.

Ceiling effect : Large number of test-takers achieve the highest possible score – occurs if items are too easy for the target population.

The majority of items should be moderately difficult. When a test has many items that are too difficult or too easy, the test scores are clustered at only one end of the curve. This prevents the recognition of differences in the traits or abilities of various individuals.
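As a rough sketch, the 0.3 to 0.7 range can be turned into a simple screening rule. The function name `classify_difficulty`, its labels, and the choice to treat the boundary values 0.3 and 0.7 as acceptable are assumptions made for illustration; the text does not say how boundary cases should be handled.

```python
def classify_difficulty(p: float, low: float = 0.3, high: float = 0.7) -> str:
    """Label an item by its difficulty index p (proportion correct)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion between 0 and 1")
    if p < low:
        return "too difficult"   # many such items risk a floor effect
    if p > high:
        return "too easy"        # many such items risk a ceiling effect
    return "moderate"            # boundaries are treated as acceptable here


# Example: screen a small set of hypothetical difficulty indices.
for p in (0.15, 0.40, 0.76):
    print(p, classify_difficulty(p))
```

A label of "too easy" or "too difficult" is a prompt to review the item, not an automatic decision to discard it.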

Drawback of Item Difficulty Index :

Since item difficulty measures the number of correct responses for a given item, it requires the item to have a definitive correct answer. Therefore, the item difficulty index is applicable mainly to ability, aptitude, and achievement tests, where right or wrong answers are present. In contrast, personality and attitude tests do not have correct answers, making the concept of item difficulty irrelevant and unhelpful in these cases.

2. Item Discrimination

The primary purpose of most tests is to identify and measure variations among individuals. Discriminating between high and low performers allows for an accurate assessment of where each test-taker stands in terms of ability, knowledge, or trait.

Definition of Item Discrimination

An item-discrimination index is a statistical index of how efficiently an item discriminates between persons who obtain high and low scores on the entire test.

– Gregory R.J (2015)

Extreme Group Method:

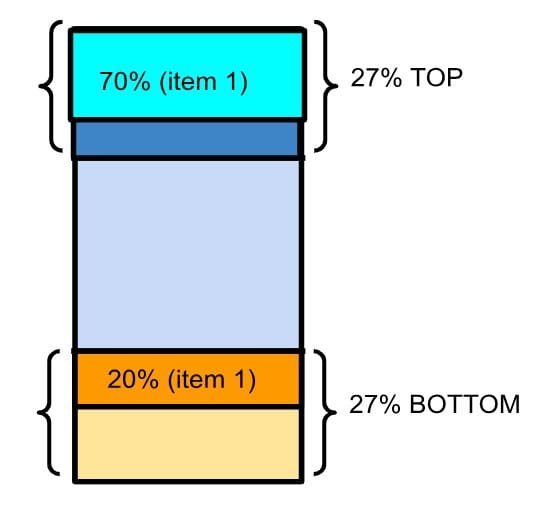

A common practice for finding the discriminating ability of an item is to compare the performance of test takers who score at the extremes of the test. Kelley (1939) proposed that, for normally distributed scores, the upper and lower 27 percent of test takers be used to calculate item discrimination.

Item Discrimination Index (D) = U – L, where U is the proportion of top scorers who answered the item correctly and L is the proportion of bottom scorers who answered it correctly.

| Item No. | Proportion of Top Scorers (U) | Proportion of Bottom Scorers (L) | Item Discrimination Index (D) = U – L | Interpretation |
|---|---|---|---|---|
| 1 | 0.70 | 0.20 | 0.70 – 0.20 = 0.50 | Moderate : Modify to increase index |
| 2 | 0.60 | 0.40 | 0.60 – 0.40 = 0.20 | Very low : Discard |
| 3 | 0.90 | 0.27 | 0.90 – 0.27 = 0.63 | High : Keep |
| 4 | 0.20 | 0.40 | 0.20 – 0.40 = -0.20 | Negative : Faulty item |

Representation of Item Discrimination of Item 1

The item discrimination index (D) ranges from -1 to +1. The higher the discrimination index, the better the item discriminates between top scorers and bottom scorers. A negative value means that bottom scorers answered the item correctly more often than top scorers. The optimum discrimination index should be above 0.5.
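The extreme group method can be sketched as follows. This is one possible implementation under stated assumptions: the function name `discrimination_index`, the rounding of the group size, and the handling of tied total scores (ties keep their original order) are choices made for this example and are not prescribed by the text. Item responses are coded 1 for correct and 0 for incorrect.

```python
def discrimination_index(total_scores, item_correct, fraction=0.27):
    """Extreme-group discrimination index D = U - L for one item.

    total_scores: total test score of each test taker
    item_correct: 1 if that test taker answered the item correctly, else 0
    fraction:     share of test takers in each extreme group (27% by default)
    """
    n = len(total_scores)
    if n != len(item_correct):
        raise ValueError("both lists must describe the same test takers")
    if n < 2:
        raise ValueError("at least two test takers are needed")
    if not 0 < fraction <= 0.5:
        raise ValueError("fraction must be in (0, 0.5]")

    # Group size: rounded share of n, at least 1, and never overlapping.
    k = min(max(1, round(n * fraction)), n // 2)

    # Indices of test takers ordered from lowest to highest total score.
    order = sorted(range(n), key=lambda i: total_scores[i])
    lower, upper = order[:k], order[-k:]

    u = sum(item_correct[i] for i in upper) / k  # proportion correct, top group
    l = sum(item_correct[i] for i in lower) / k  # proportion correct, bottom group
    return u - l


# Ten hypothetical test takers; the item is answered mostly by high scorers.
scores = [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]
good_item = [1, 1, 1, 1, 0, 1, 0, 0, 1, 0]
reversed_item = [0, 0, 0, 1, 1, 1, 1, 1, 1, 1]

print(round(discrimination_index(scores, good_item), 2))
print(discrimination_index(scores, reversed_item))
```

With ten test takers, each extreme group holds three people, so the first item is compared on the three highest and three lowest scorers only. The second item illustrates a negative index, where the bottom group outperforms the top group.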

Item Endorsement Index

Unlike the item difficulty index, the item discrimination index can be used for both ability tests and personality tests. In personality and attitude tests, where no response is correct, positive responses on a test item are counted instead of correct ones. The resulting proportion is often referred to as the ‘item endorsement index,’ and comparing it between high and low scorers on the trait gives the item's discrimination.

For example, on a 5-point Likert scale ranging from ‘Strongly Agree’ to ‘Strongly Disagree,’ responses such as ‘Strongly Agree’ and ‘Agree’ will be counted to differentiate between individuals who are high and low on the measured trait.
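Counting positive responses on a Likert item might look like the sketch below. The function name `endorsement_proportion` and the middle label "Neutral" are assumptions for illustration; only ‘Strongly Agree’, ‘Agree’, and ‘Strongly Disagree’ are named in the text.

```python
def endorsement_proportion(responses, endorsing=("Strongly Agree", "Agree")):
    """Proportion of respondents who endorsed the item (gave a positive response)."""
    if not responses:
        raise ValueError("responses must not be empty")
    endorsed = sum(1 for r in responses if r in endorsing)
    return endorsed / len(responses)


# Five hypothetical respondents answering one attitude item.
responses = ["Strongly Agree", "Agree", "Neutral", "Disagree", "Agree"]
print(endorsement_proportion(responses))
```

Computing this proportion separately for respondents who score high and low on the whole scale, and subtracting the two, gives a discrimination index in the same way as for ability items.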

Item Characteristic Curve (ICC)

- An item characteristic curve is a graphic representation of item difficulty and discrimination (Cohen & Swerdlik, 2009).

- An item characteristic curve (ICC) is a graphical display of the relationship between the probability of a correct response and the examinee’s position on the underlying trait measured by the test. It is also known as an item response function (Gregory, 2015).

The item characteristic curve is based on Item Response Theory (IRT), a modern approach to test design and analysis that focuses on the relationship between individual test items and a test-taker’s underlying traits or abilities.

The ICC plots ability levels (often on the x-axis) against the probability of a correct response (on the y-axis), illustrating how likely individuals of varying abilities are to answer the item correctly.

ICC : A plot of the total test scores on the horizontal axis versus the proportion of examinees passing the item on the vertical axis

The simplest ICC model is the Rasch model (Rasch, 1966), which is based on two assumptions:

- test items are unidimensional and measure one common trait,

- test items vary on a continuum of difficulty level.

Key Component of Item Characteristic Curve (ICC)

- Slope (Discrimination): The steepness of the curve indicates how well the item differentiates between test-takers of different ability levels.

- Difficulty: The point on the ability scale where the probability of a correct answer is 50% shows the item’s difficulty level.
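The two components can be made concrete with a small sketch. It assumes a logistic curve with a difficulty parameter and a slope parameter, a common choice in Item Response Theory but not a form specified in the text. The function name `icc_probability` and the parameter names are hypothetical. With the slope fixed at 1, the curve takes the form used by the Rasch model.

```python
import math


def icc_probability(theta: float, difficulty: float, discrimination: float = 1.0) -> float:
    """Probability of a correct response at ability level theta.

    Assumed logistic form: P = 1 / (1 + exp(-a * (theta - b))),
    where b is the item difficulty and a is the slope (discrimination).
    """
    return 1.0 / (1.0 + math.exp(-discrimination * (theta - difficulty)))


# At theta equal to the item's difficulty, the probability is exactly 0.5.
print(icc_probability(theta=0.5, difficulty=0.5))

# A steeper slope separates nearby ability levels more sharply.
for a in (0.5, 1.0, 2.0):
    low = icc_probability(-1.0, difficulty=0.0, discrimination=a)
    high = icc_probability(1.0, difficulty=0.0, discrimination=a)
    print(f"slope {a}: P(-1) = {low:.2f}, P(+1) = {high:.2f}")
```

Evaluating the function over a grid of ability values and plotting the result would reproduce the familiar s-shaped curve.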

The desired shape of the ICC depends on the purpose of the test. If the ability to solve a particular item is normally distributed, the ICC will resemble a normal ogive (s-shaped), like “Curve a” in the above figure (Lord & Novick, 1968). “Curve b” would be the best for selecting examinees with high levels of the measured trait, since it shows high discrimination power as well as high item difficulty. “Curve c” indicates a very difficult item, since even examinees with high ability cannot solve it reliably. It also has low discrimination power, so this item should be either modified or discarded.

Application of Item Characteristic Curve (ICC)

ICC theory seems particularly appropriate for certain forms of computerized adaptive testing (CAT), in which each test taker responds to an individualized and unique set of items that are then scored on an underlying uniform scale (Weiss, 1983). The CAT approach to assessment would not be possible in the absence of an ICC approach to measurement.

Conclusion

In conclusion, item analysis plays a crucial role in the development and refinement of psychological tests, allowing test makers to evaluate each item’s quality and effectiveness in measuring specific skills, traits, or abilities.

Item difficulty ensures that test questions are appropriately challenging and aids in identifying items that are too easy or too difficult, which might not effectively measure variations in test-taker ability. Meanwhile, item discrimination allows for selecting items that best differentiate between high and low performers, enhancing the test’s validity in assessing the target construct.

The Item Characteristic Curve (ICC), as part of Item Response Theory, further refines item analysis by providing a visual and statistical understanding of how well an item functions across different ability levels, incorporating factors like difficulty & discrimination.

Together, these elements of item analysis contribute to building robust, reliable, and meaningful assessments that accurately measure and differentiate among individuals’ abilities or traits.

Reference for Item Analysis

- Anastasi, A. & Urbina, S. (2009). Psychological testing. N.D.: Pearson Education.

- Cohen, R., & Swerdlik, M. (2009). Psychological Testing and Assessment: An Introduction to Tests and Measurement (7th ed.). New York: McGraw Hill.

- Hogan, T. P. (2003). Psychological testing: A Practical Introduction. For Dummies.

- Kaplan, R. M., & Saccuzzo, D. P. (2005). Psychological Testing: Principles, Applications and Issues (6th ed.). Cengage Learning India Pvt. Ltd.

- Singh, A.K. (2006). Tests, Measurements and research methods in behavioural sciences. Patna: Bharati Bhavan.

Niwlikar, B. A. (2024, November 20). What Is Item Analysis? 2 Methods to identify Best Test Items for Test. Careershodh. https://www.careershodh.com/item-analysis-2/